A DRL approach for autonomous navigation that leverages context to improve performance in unknown environments.

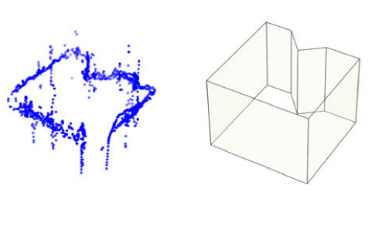

Mapless navigation refers to a challenging task where a mobile robot must rapidly navigate to a predefined destination using its partial knowledge of the environment, which is updated online along the way, instead of a prior map of the environment. Inspired by the recent developments in deep reinforcement learning (DRL), we propose a learning-based framework for mapless navigation, which employs a context-aware policy network to achieve efficient decision-making (i.e., maximize the likelihood of finding the shortest route towards the target destination), especially in complex and large-scale environments. Specifically, our robot learns to form a context of its belief over the entire known area, which it uses to reason about long-term efficiency and sequence short-term movements. Additionally, we propose a graph rarefaction algorithm to enable more efficient decision-making in large-scale applications. We empirically demonstrate that our approach reduces average travel time by up to 61.4% and average planning time by up to 88.2% compared to benchmark planners (D* Lite and BIT) on hundreds of test scenarios. We also validate our approach both in high-fidelity Gazebo simulations and on hardware, highlighting its promising applicability in the real world without further training/tuning.
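To make the problem setting concrete, the following is a minimal sketch of a mapless navigation loop on a toy occupancy grid. It is not the learned policy proposed here. It is a simple optimistic replanner, assumed purely for illustration, that treats unobserved cells as free, senses nearby obstacles, and replans with breadth-first search after every step. The names `bfs_path` and `navigate`, the grid encoding (0 = free, 1 = obstacle), and the square sensor footprint are all hypothetical choices.

```python
from collections import deque


def bfs_path(known_obstacles, start, goal, rows, cols):
    """Shortest 4-connected path that avoids only the obstacles seen so far.

    Unobserved cells are optimistically assumed to be free (an assumption
    of this sketch, not of the paper).
    """
    prev = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and nxt not in prev and nxt not in known_obstacles:
                prev[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable given current knowledge


def navigate(true_grid, start, goal, sensor_range=1):
    """Move from start to goal, updating the partial map online.

    Assumes the start cell is free. Returns the list of visited cells,
    or None if the goal turns out to be unreachable.
    """
    rows, cols = len(true_grid), len(true_grid[0])
    known_obstacles = set()
    pos = start
    trajectory = [pos]
    while pos != goal:
        # Sense: reveal obstacles inside a square window around the robot.
        r, c = pos
        for dr in range(-sensor_range, sensor_range + 1):
            for dc in range(-sensor_range, sensor_range + 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and true_grid[nr][nc] == 1:
                    known_obstacles.add((nr, nc))
        # Plan on the partial map, then execute only the first step.
        path = bfs_path(known_obstacles, pos, goal, rows, cols)
        if path is None:
            return None
        pos = path[1]
        trajectory.append(pos)
    return trajectory


if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],
        [0, 0, 0, 0],
    ]
    print(navigate(grid, (0, 0), (2, 0)))
```

In this toy map the robot first plans through a wall segment it has not yet observed, discovers it, and detours through the only gap. Detours of this kind are the inefficiency that a policy reasoning over the entire known area aims to reduce.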